The Fast Fourier Transform, Chapter 12 - Analog Devices

Farnell Element 14:

See the trailer for the next exciting episode of The Ben Heck show. Check back on Friday to be among the first to see the exclusive full show on element…

Connect your Raspberry Pi to a breadboard, download some code and create a push-button audio play project.

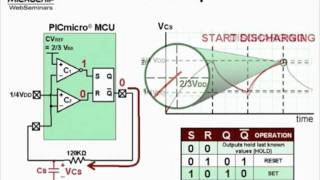

Other documentation:

Farnell-NA555-NE555-..> 08-Sep-2014 07:33 1.5M

Farnell-AD9834-Rev-D..> 08-Sep-2014 07:32 1.2M

Farnell-MSP430F15x-M..> 08-Sep-2014 07:32 1.3M

Farnell-AD736-Rev-I-..> 08-Sep-2014 07:31 1.3M

Farnell-AD8307-Data-..> 08-Sep-2014 07:30 1.3M

Farnell-Single-Chip-..> 08-Sep-2014 07:30 1.5M

Farnell-Quadruple-2-..> 08-Sep-2014 07:29 1.5M

Farnell-ADE7758-Rev-..> 08-Sep-2014 07:28 1.7M

Farnell-MAX3221-Rev-..> 08-Sep-2014 07:28 1.8M

Farnell-USB-to-Seria..> 08-Sep-2014 07:27 2.0M

Farnell-AD8313-Analo..> 08-Sep-2014 07:26 2.0M

Farnell-SN54HC164-SN..> 08-Sep-2014 07:25 2.0M

Farnell-AD8310-Analo..> 08-Sep-2014 07:24 2.1M

Farnell-AD8361-Rev-D..> 08-Sep-2014 07:23 2.1M

Farnell-2N3906-Fairc..> 08-Sep-2014 07:22 2.1M

Farnell-AD584-Rev-C-..> 08-Sep-2014 07:20 2.2M

Farnell-ADE7753-Rev-..> 08-Sep-2014 07:20 2.3M

Farnell-TLV320AIC23B..> 08-Sep-2014 07:18 2.4M

Farnell-AD586BRZ-Ana..> 08-Sep-2014 07:17 1.6M

Farnell-STM32F405xxS..> 27-Aug-2014 18:27 1.8M

Farnell-MSP430-Hardw..> 29-Jul-2014 10:36 1.1M

Farnell-LM324-Texas-..> 29-Jul-2014 10:32 1.5M

Farnell-LM386-Low-Vo..> 29-Jul-2014 10:32 1.5M

Farnell-NE5532-Texas..> 29-Jul-2014 10:32 1.5M

Farnell-Hex-Inverter..> 29-Jul-2014 10:31 875K

Farnell-AT90USBKey-H..> 29-Jul-2014 10:31 902K

Farnell-AT89C5131-Ha..> 29-Jul-2014 10:31 1.2M

Farnell-MSP-EXP430F5..> 29-Jul-2014 10:31 1.2M

Farnell-Explorer-16-..> 29-Jul-2014 10:31 1.3M

Farnell-TMP006EVM-Us..> 29-Jul-2014 10:30 1.3M

Farnell-Gertboard-Us..> 29-Jul-2014 10:30 1.4M

Farnell-LMP91051-Use..> 29-Jul-2014 10:30 1.4M

Farnell-Thermometre-..> 29-Jul-2014 10:30 1.4M

Farnell-user-manuel-..> 29-Jul-2014 10:29 1.5M

Farnell-fx-3650P-fx-..> 29-Jul-2014 10:29 1.5M

Farnell-2-GBPS-Diffe..> 28-Jul-2014 17:42 2.7M

Farnell-LMT88-2.4V-1..> 28-Jul-2014 17:42 2.8M

Farnell-Octal-Genera..> 28-Jul-2014 17:42 2.8M

Farnell-Dual-MOSFET-..> 28-Jul-2014 17:41 2.8M

Farnell-TLV320AIC325..> 28-Jul-2014 17:41 2.9M

Farnell-SN54LV4053A-..> 28-Jul-2014 17:20 5.9M

Farnell-TAS1020B-USB..> 28-Jul-2014 17:19 6.2M

Farnell-TPS40060-Wid..> 28-Jul-2014 17:19 6.3M

Farnell-TL082-Wide-B..> 28-Jul-2014 17:16 6.3M

Farnell-RF-short-tra..> 28-Jul-2014 17:16 6.3M

Farnell-maxim-integr..> 28-Jul-2014 17:14 6.4M

Farnell-TSV6390-TSV6..> 28-Jul-2014 17:14 6.4M

Farnell-Fast-Charge-..> 28-Jul-2014 17:12 6.4M

Farnell-NVE-datashee..> 28-Jul-2014 17:12 6.5M

Farnell-Excalibur-Hi..> 28-Jul-2014 17:10 2.4M

Farnell-Excalibur-Hi..> 28-Jul-2014 17:10 2.4M

Farnell-REF102-10V-P..> 28-Jul-2014 17:09 2.4M

Farnell-TMS320F28055..> 28-Jul-2014 17:09 2.7M

Farnell-MULTICOMP-Ra..> 22-Jul-2014 12:35 5.9M

Farnell-RASPBERRY-PI..> 22-Jul-2014 12:35 5.9M

Farnell-Dremel-Exper..> 22-Jul-2014 12:34 1.6M

Farnell-STM32F103x8-..> 22-Jul-2014 12:33 1.6M

Farnell-BD6xxx-PDF.htm 22-Jul-2014 12:33 1.6M

Farnell-L78S-STMicro..> 22-Jul-2014 12:32 1.6M

Farnell-RaspiCam-Doc..> 22-Jul-2014 12:32 1.6M

Farnell-SB520-SB5100..> 22-Jul-2014 12:32 1.6M

Farnell-iServer-Micr..> 22-Jul-2014 12:32 1.6M

Farnell-LUMINARY-MIC..> 22-Jul-2014 12:31 3.6M

Farnell-TEXAS-INSTRU..> 22-Jul-2014 12:31 2.4M

Farnell-TEXAS-INSTRU..> 22-Jul-2014 12:30 4.6M

Farnell-CLASS 1-or-2..> 22-Jul-2014 12:30 4.7M

Farnell-TEXAS-INSTRU..> 22-Jul-2014 12:29 4.8M

Farnell-Evaluating-t..> 22-Jul-2014 12:28 4.9M

Farnell-LM3S6952-Mic..> 22-Jul-2014 12:27 5.9M

Farnell-Keyboard-Mou..> 22-Jul-2014 12:27 5.9M

Farnell-Full-Datashe..> 15-Jul-2014 17:08 951K

Farnell-pmbta13_pmbt..> 15-Jul-2014 17:06 959K

Farnell-EE-SPX303N-4..> 15-Jul-2014 17:06 969K

Farnell-Datasheet-NX..> 15-Jul-2014 17:06 1.0M

Farnell-Datasheet-Fa..> 15-Jul-2014 17:05 1.0M

Farnell-MIDAS-un-tra..> 15-Jul-2014 17:05 1.0M

Farnell-SERIAL-TFT-M..> 15-Jul-2014 17:05 1.0M

Farnell-MCOC1-Farnel..> 15-Jul-2014 17:05 1.0M

Farnell-TMR-2-series..> 15-Jul-2014 16:48 787K

Farnell-DC-DC-Conver..> 15-Jul-2014 16:48 781K

Farnell-Full-Datashe..> 15-Jul-2014 16:47 803K

Farnell-TMLM-Series-..> 15-Jul-2014 16:47 810K

Farnell-TEL-5-Series..> 15-Jul-2014 16:47 814K

Farnell-TXL-series-t..> 15-Jul-2014 16:47 829K

Farnell-TEP-150WI-Se..> 15-Jul-2014 16:47 837K

Farnell-AC-DC-Power-..> 15-Jul-2014 16:47 845K

Farnell-TIS-Instruct..> 15-Jul-2014 16:47 845K

Farnell-TOS-tracopow..> 15-Jul-2014 16:47 852K

Farnell-TCL-DC-traco..> 15-Jul-2014 16:46 858K

Farnell-TIS-series-t..> 15-Jul-2014 16:46 875K

Farnell-TMR-2-Series..> 15-Jul-2014 16:46 897K

Farnell-TMR-3-WI-Ser..> 15-Jul-2014 16:46 939K

Farnell-TEN-8-WI-Ser..> 15-Jul-2014 16:46 939K

Farnell-Full-Datashe..> 15-Jul-2014 16:46 947K

Farnell-HIP4081A-Int..> 07-Jul-2014 19:47 1.0M

Farnell-ISL6251-ISL6..> 07-Jul-2014 19:47 1.1M

Farnell-DG411-DG412-..> 07-Jul-2014 19:47 1.0M

Farnell-3367-ARALDIT..> 07-Jul-2014 19:46 1.2M

Farnell-ICM7228-Inte..> 07-Jul-2014 19:46 1.1M

Farnell-Data-Sheet-K..> 07-Jul-2014 19:46 1.2M

Farnell-Silica-Gel-M..> 07-Jul-2014 19:46 1.2M

Farnell-TKC2-Dusters..> 07-Jul-2014 19:46 1.2M

Farnell-CRC-HANDCLEA..> 07-Jul-2014 19:46 1.2M

Farnell-760G-French-..> 07-Jul-2014 19:45 1.2M

Farnell-Decapant-KF-..> 07-Jul-2014 19:45 1.2M

Farnell-1734-ARALDIT..> 07-Jul-2014 19:45 1.2M

Farnell-Araldite-Fus..> 07-Jul-2014 19:45 1.2M

Farnell-fiche-de-don..> 07-Jul-2014 19:44 1.4M

Farnell-safety-data-..> 07-Jul-2014 19:44 1.4M

Farnell-A-4-Hardener..> 07-Jul-2014 19:44 1.4M

Farnell-CC-Debugger-..> 07-Jul-2014 19:44 1.5M

Farnell-MSP430-Hardw..> 07-Jul-2014 19:43 1.8M

Farnell-SmartRF06-Ev..> 07-Jul-2014 19:43 1.6M

Farnell-CC2531-USB-H..> 07-Jul-2014 19:43 1.8M

Farnell-Alimentation..> 07-Jul-2014 19:43 1.8M

Farnell-BK889B-PONT-..> 07-Jul-2014 19:42 1.8M

Farnell-User-Guide-M..> 07-Jul-2014 19:41 2.0M

Farnell-T672-3000-Se..> 07-Jul-2014 19:41 2.0M

Farnell-0050375063-D..> 18-Jul-2014 17:03 2.5M

Farnell-Mini-Fit-Jr-..> 18-Jul-2014 17:03 2.5M

Farnell-43031-0002-M..> 18-Jul-2014 17:03 2.5M

Farnell-0433751001-D..> 18-Jul-2014 17:02 2.5M

Farnell-Cube-3D-Prin..> 18-Jul-2014 17:02 2.5M

Farnell-MTX-Compact-..> 18-Jul-2014 17:01 2.5M

Farnell-MTX-3250-MTX..> 18-Jul-2014 17:01 2.5M

Farnell-ATtiny26-L-A..> 18-Jul-2014 17:00 2.6M

Farnell-MCP3421-Micr..> 18-Jul-2014 17:00 1.2M

Farnell-LM19-Texas-I..> 18-Jul-2014 17:00 1.2M

Farnell-Data-Sheet-S..> 18-Jul-2014 17:00 1.2M

Farnell-LMH6518-Texa..> 18-Jul-2014 16:59 1.3M

Farnell-AD7719-Low-V..> 18-Jul-2014 16:59 1.4M

Farnell-DAC8143-Data..> 18-Jul-2014 16:59 1.5M

Farnell-BGA7124-400-..> 18-Jul-2014 16:59 1.5M

Farnell-SICK-OPTIC-E..> 18-Jul-2014 16:58 1.5M

Farnell-LT3757-Linea..> 18-Jul-2014 16:58 1.6M

Farnell-LT1961-Linea..> 18-Jul-2014 16:58 1.6M

Farnell-PIC18F2420-2..> 18-Jul-2014 16:57 2.5M

Farnell-DS3231-DS-PD..> 18-Jul-2014 16:57 2.5M

Farnell-RDS-80-PDF.htm 18-Jul-2014 16:57 1.3M

Farnell-AD8300-Data-..> 18-Jul-2014 16:56 1.3M

Farnell-LT6233-Linea..> 18-Jul-2014 16:56 1.3M

Farnell-MAX1365-MAX1..> 18-Jul-2014 16:56 1.4M

Farnell-XPSAF5130-PD..> 18-Jul-2014 16:56 1.4M

Farnell-DP83846A-DsP..> 18-Jul-2014 16:55 1.5M

Farnell-Dremel-Exper..> 18-Jul-2014 16:55 1.6M

Farnell-MCOC1-Farnel..> 16-Jul-2014 09:04 1.0M

Farnell-SL3S1203_121..> 16-Jul-2014 09:04 1.1M

Farnell-PN512-Full-N..> 16-Jul-2014 09:03 1.4M

Farnell-SL3S4011_402..> 16-Jul-2014 09:03 1.1M

Farnell-LPC408x-7x 3..> 16-Jul-2014 09:03 1.6M

Farnell-PCF8574-PCF8..> 16-Jul-2014 09:03 1.7M

Farnell-LPC81xM-32-b..> 16-Jul-2014 09:02 2.0M

Farnell-LPC1769-68-6..> 16-Jul-2014 09:02 1.9M

Farnell-Download-dat..> 16-Jul-2014 09:02 2.2M

Farnell-LPC3220-30-4..> 16-Jul-2014 09:02 2.2M

Farnell-LPC11U3x-32-..> 16-Jul-2014 09:01 2.4M

Farnell-SL3ICS1002-1..> 16-Jul-2014 09:01 2.5M

Farnell-T672-3000-Se..> 08-Jul-2014 18:59 2.0M

Farnell-tesa®pack63..> 08-Jul-2014 18:56 2.0M

Farnell-Encodeur-USB..> 08-Jul-2014 18:56 2.0M

Farnell-CC2530ZDK-Us..> 08-Jul-2014 18:55 2.1M

Farnell-2020-Manuel-..> 08-Jul-2014 18:55 2.1M

Farnell-Synchronous-..> 08-Jul-2014 18:54 2.1M

Farnell-Arithmetic-L..> 08-Jul-2014 18:54 2.1M

Farnell-NA555-NE555-..> 08-Jul-2014 18:53 2.2M

Farnell-4-Bit-Magnit..> 08-Jul-2014 18:53 2.2M

Farnell-LM555-Timer-..> 08-Jul-2014 18:53 2.2M

Farnell-L293d-Texas-..> 08-Jul-2014 18:53 2.2M

Farnell-SN54HC244-SN..> 08-Jul-2014 18:52 2.3M

Farnell-MAX232-MAX23..> 08-Jul-2014 18:52 2.3M

Farnell-High-precisi..> 08-Jul-2014 18:51 2.3M

Farnell-SMU-Instrume..> 08-Jul-2014 18:51 2.3M

Farnell-900-Series-B..> 08-Jul-2014 18:50 2.3M

Farnell-BA-Series-Oh..> 08-Jul-2014 18:50 2.3M

Farnell-UTS-Series-S..> 08-Jul-2014 18:49 2.5M

Farnell-270-Series-O..> 08-Jul-2014 18:49 2.3M

Farnell-UTS-Series-S..> 08-Jul-2014 18:49 2.8M

Farnell-Tiva-C-Serie..> 08-Jul-2014 18:49 2.6M

Farnell-UTO-Souriau-..> 08-Jul-2014 18:48 2.8M

Farnell-Clipper-Seri..> 08-Jul-2014 18:48 2.8M

Farnell-SOURIAU-Cont..> 08-Jul-2014 18:47 3.0M

Farnell-851-Series-P..> 08-Jul-2014 18:47 3.0M

Farnell-SL59830-Inte..> 06-Jul-2014 10:07 1.0M

Farnell-ALF1210-PDF.htm 06-Jul-2014 10:06 4.0M

Farnell-AD7171-16-Bi..> 06-Jul-2014 10:06 1.0M

Farnell-Low-Noise-24..> 06-Jul-2014 10:05 1.0M

Farnell-ESCON-Featur..> 06-Jul-2014 10:05 938K

Farnell-74LCX573-Fai..> 06-Jul-2014 10:05 1.9M

Farnell-1N4148WS-Fai..> 06-Jul-2014 10:04 1.9M

Farnell-FAN6756-Fair..> 06-Jul-2014 10:04 850K

Farnell-Datasheet-Fa..> 06-Jul-2014 10:04 861K

Farnell-ES1F-ES1J-fi..> 06-Jul-2014 10:04 867K

Farnell-QRE1113-Fair..> 06-Jul-2014 10:03 879K

Farnell-2N7002DW-Fai..> 06-Jul-2014 10:03 886K

Farnell-FDC2512-Fair..> 06-Jul-2014 10:03 886K

Farnell-FDV301N-Digi..> 06-Jul-2014 10:03 886K

Farnell-S1A-Fairchil..> 06-Jul-2014 10:03 896K

Farnell-BAV99-Fairch..> 06-Jul-2014 10:03 896K

Farnell-74AC00-74ACT..> 06-Jul-2014 10:03 911K

Farnell-NaPiOn-Panas..> 06-Jul-2014 10:02 911K

Farnell-LQ-RELAYS-AL..> 06-Jul-2014 10:02 924K

Farnell-ev-relays-ae..> 06-Jul-2014 10:02 926K

Farnell-ESCON-Featur..> 06-Jul-2014 10:02 931K

Farnell-Amplifier-In..> 06-Jul-2014 10:02 940K

Farnell-Serial-File-..> 06-Jul-2014 10:02 941K

Farnell-Both-the-Del..> 06-Jul-2014 10:01 948K

Farnell-Videk-PDF.htm 06-Jul-2014 10:01 948K

Farnell-EPCOS-173438..> 04-Jul-2014 10:43 3.3M

Farnell-Sensorless-C..> 04-Jul-2014 10:42 3.3M

Farnell-197.31-KB-Te..> 04-Jul-2014 10:42 3.3M

Farnell-PIC12F609-61..> 04-Jul-2014 10:41 3.7M

Farnell-PADO-semi-au..> 04-Jul-2014 10:41 3.7M

Farnell-03-iec-runds..> 04-Jul-2014 10:40 3.7M

Farnell-ACC-Silicone..> 04-Jul-2014 10:40 3.7M

Farnell-Series-TDS10..> 04-Jul-2014 10:39 4.0M

Farnell-03-iec-runds..> 04-Jul-2014 10:40 3.7M

Farnell-0430300011-D..> 14-Jun-2014 18:13 2.0M

Farnell-06-6544-8-PD..> 26-Mar-2014 17:56 2.7M

Farnell-3M-Polyimide..> 21-Mar-2014 08:09 3.9M

Farnell-3M-VolitionT..> 25-Mar-2014 08:18 3.3M

Farnell-10BQ060-PDF.htm 14-Jun-2014 09:50 2.4M

Farnell-10TPB47M-End..> 14-Jun-2014 18:16 3.4M

Farnell-12mm-Size-In..> 14-Jun-2014 09:50 2.4M

Farnell-24AA024-24LC..> 23-Jun-2014 10:26 3.1M

Farnell-50A-High-Pow..> 20-Mar-2014 17:31 2.9M

Farnell-197.31-KB-Te..> 04-Jul-2014 10:42 3.3M

Farnell-1907-2006-PD..> 26-Mar-2014 17:56 2.7M

Farnell-5910-PDF.htm 25-Mar-2014 08:15 3.0M

Farnell-6517b-Electr..> 29-Mar-2014 11:12 3.3M

Farnell-A-True-Syste..> 29-Mar-2014 11:13 3.3M

Farnell-ACC-Silicone..> 04-Jul-2014 10:40 3.7M

Farnell-AD524-PDF.htm 20-Mar-2014 17:33 2.8M

Farnell-ADL6507-PDF.htm 14-Jun-2014 18:19 3.4M

Farnell-ADSP-21362-A..> 20-Mar-2014 17:34 2.8M

Farnell-ALF1210-PDF.htm 04-Jul-2014 10:39 4.0M

Farnell-ALF1225-12-V..> 01-Apr-2014 07:40 3.4M

Farnell-ALF2412-24-V..> 01-Apr-2014 07:39 3.4M

Farnell-AN10361-Phil..> 23-Jun-2014 10:29 2.1M

Farnell-ARADUR-HY-13..> 26-Mar-2014 17:55 2.8M

Farnell-ARALDITE-201..> 21-Mar-2014 08:12 3.7M

Farnell-ARALDITE-CW-..> 26-Mar-2014 17:56 2.7M

Farnell-ATMEL-8-bit-..> 19-Mar-2014 18:04 2.1M

Farnell-ATMEL-8-bit-..> 11-Mar-2014 07:55 2.1M

Farnell-ATmega640-VA..> 14-Jun-2014 09:49 2.5M

Farnell-ATtiny20-PDF..> 25-Mar-2014 08:19 3.6M

Farnell-ATtiny26-L-A..> 13-Jun-2014 18:40 1.8M

Farnell-Alimentation..> 14-Jun-2014 18:24 2.5M

Farnell-Alimentation..> 01-Apr-2014 07:42 3.4M

Farnell-Amplificateu..> 29-Mar-2014 11:11 3.3M

Farnell-An-Improved-..> 14-Jun-2014 09:49 2.5M

Farnell-Atmel-ATmega..> 19-Mar-2014 18:03 2.2M

Farnell-Avvertenze-e..> 14-Jun-2014 18:20 3.3M

Farnell-BC846DS-NXP-..> 13-Jun-2014 18:42 1.6M

Farnell-BC847DS-NXP-..> 23-Jun-2014 10:24 3.3M

Farnell-BF545A-BF545..> 23-Jun-2014 10:28 2.1M

Farnell-BK2650A-BK26..> 29-Mar-2014 11:10 3.3M

Farnell-BT151-650R-N..> 13-Jun-2014 18:40 1.7M

Farnell-BTA204-800C-..> 13-Jun-2014 18:42 1.6M

Farnell-BUJD203AX-NX..> 13-Jun-2014 18:41 1.7M

Farnell-BYV29F-600-N..> 13-Jun-2014 18:42 1.6M

Farnell-BYV79E-serie..> 10-Mar-2014 16:19 1.6M

Farnell-BZX384-serie..> 23-Jun-2014 10:29 2.1M

Farnell-Battery-GBA-..> 14-Jun-2014 18:13 2.0M

Farnell-C.A-6150-C.A..> 14-Jun-2014 18:24 2.5M

Farnell-C.A 8332B-C...> 01-Apr-2014 07:40 3.4M

Farnell-CC2560-Bluet..> 29-Mar-2014 11:14 2.8M

Farnell-CD4536B-Type..> 14-Jun-2014 18:13 2.0M

Farnell-CIRRUS-LOGIC..> 10-Mar-2014 17:20 2.1M

Farnell-CS5532-34-BS..> 01-Apr-2014 07:39 3.5M

Farnell-Cannon-ZD-PD..> 11-Mar-2014 08:13 2.8M

Farnell-Ceramic-tran..> 14-Jun-2014 18:19 3.4M

Farnell-Circuit-Note..> 26-Mar-2014 18:00 2.8M

Farnell-Circuit-Note..> 26-Mar-2014 18:00 2.8M

Farnell-Cles-electro..> 21-Mar-2014 08:13 3.9M

Farnell-Conception-d..> 11-Mar-2014 07:49 2.4M

Farnell-Connectors-N..> 14-Jun-2014 18:12 2.1M

Farnell-Construction..> 14-Jun-2014 18:25 2.5M

Farnell-Controle-de-..> 11-Mar-2014 08:16 2.8M

Farnell-Cordless-dri..> 14-Jun-2014 18:13 2.0M

Farnell-Current-Tran..> 26-Mar-2014 17:58 2.7M

Farnell-Current-Tran..> 26-Mar-2014 17:58 2.7M

Farnell-Current-Tran..> 26-Mar-2014 17:59 2.7M

Farnell-Current-Tran..> 26-Mar-2014 17:59 2.7M

Farnell-DC-Fan-type-..> 14-Jun-2014 09:48 2.5M

Farnell-DC-Fan-type-..> 14-Jun-2014 09:51 1.8M

Farnell-Davum-TMC-PD..> 14-Jun-2014 18:27 2.4M

Farnell-De-la-puissa..> 29-Mar-2014 11:10 3.3M

Farnell-Directive-re..> 25-Mar-2014 08:16 3.0M

Farnell-Documentatio..> 14-Jun-2014 18:26 2.5M

Farnell-Download-dat..> 13-Jun-2014 18:40 1.8M

Farnell-ECO-Series-T..> 20-Mar-2014 08:14 2.5M

Farnell-ELMA-PDF.htm 29-Mar-2014 11:13 3.3M

Farnell-EMC1182-PDF.htm 25-Mar-2014 08:17 3.0M

Farnell-EPCOS-173438..> 04-Jul-2014 10:43 3.3M

Farnell-EPCOS-Sample..> 11-Mar-2014 07:53 2.2M

Farnell-ES2333-PDF.htm 11-Mar-2014 08:14 2.8M

Farnell-Ed.081002-DA..> 19-Mar-2014 18:02 2.5M

Farnell-F28069-Picco..> 14-Jun-2014 18:14 2.0M

Farnell-F42202-PDF.htm 19-Mar-2014 18:00 2.5M

Farnell-FDS-ITW-Spra..> 14-Jun-2014 18:22 3.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-FICHE-DE-DON..> 10-Mar-2014 16:17 1.6M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Fastrack-Sup..> 23-Jun-2014 10:25 3.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Ferric-Chlor..> 29-Mar-2014 11:14 2.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Fiche-de-don..> 14-Jun-2014 09:47 2.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Fiche-de-don..> 14-Jun-2014 18:26 2.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Fluke-1730-E..> 14-Jun-2014 18:23 2.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-GALVA-A-FROI..> 26-Mar-2014 17:56 2.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-GALVA-MAT-Re..> 26-Mar-2014 17:57 2.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-GN-RELAYS-AG..> 20-Mar-2014 08:11 2.6M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-HC49-4H-Crys..> 14-Jun-2014 18:20 3.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-HFE1600-Data..> 14-Jun-2014 18:22 3.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-HI-70300-Sol..> 14-Jun-2014 18:27 2.4M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-HUNTSMAN-Adv..> 10-Mar-2014 16:17 1.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Haute-vitess..> 11-Mar-2014 08:17 2.4M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-IP4252CZ16-8..> 13-Jun-2014 18:41 1.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Instructions..> 19-Mar-2014 18:01 2.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-KSZ8851SNL-S..> 23-Jun-2014 10:28 2.1M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-L-efficacite..> 11-Mar-2014 07:52 2.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-LCW-CQ7P.CC-..> 25-Mar-2014 08:19 3.2M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-LME49725-Pow..> 14-Jun-2014 09:49 2.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-LOCTITE-542-..> 25-Mar-2014 08:15 3.0M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-LOCTITE-3463..> 25-Mar-2014 08:19 3.0M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-LUXEON-Guide..> 11-Mar-2014 07:52 2.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Leaded-Trans..> 23-Jun-2014 10:26 3.2M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Les-derniers..> 11-Mar-2014 07:50 2.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Loctite3455-..> 25-Mar-2014 08:16 3.0M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Low-cost-Enc..> 13-Jun-2014 18:42 1.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Lubrifiant-a..> 26-Mar-2014 18:00 2.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-MC3510-PDF.htm 25-Mar-2014 08:17 3.0M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-MC21605-PDF.htm 11-Mar-2014 08:14 2.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-MCF532x-7x-E..> 29-Mar-2014 11:14 2.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-MICREL-KSZ88..> 11-Mar-2014 07:54 2.2M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-MICROCHIP-PI..> 19-Mar-2014 18:02 2.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-MOLEX-39-00-..> 10-Mar-2014 17:19 1.9M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-MOLEX-43020-..> 10-Mar-2014 17:21 1.9M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-MOLEX-43160-..> 10-Mar-2014 17:21 1.9M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-MOLEX-87439-..> 10-Mar-2014 17:21 1.9M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-MPXV7002-Rev..> 20-Mar-2014 17:33 2.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-MX670-MX675-..> 14-Jun-2014 09:46 2.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Microchip-MC..> 13-Jun-2014 18:27 1.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Microship-PI..> 11-Mar-2014 07:53 2.2M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Midas-Active..> 14-Jun-2014 18:17 3.4M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Midas-MCCOG4..> 14-Jun-2014 18:11 2.1M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Miniature-Ci..> 26-Mar-2014 17:55 2.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Mistral-PDF.htm 14-Jun-2014 18:12 2.1M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Molex-83421-..> 14-Jun-2014 18:17 3.4M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Molex-COMMER..> 14-Jun-2014 18:16 3.4M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Molex-Crimp-..> 10-Mar-2014 16:27 1.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Multi-Functi..> 20-Mar-2014 17:38 3.0M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-NTE_SEMICOND..> 11-Mar-2014 07:52 2.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-NXP-74VHC126..> 10-Mar-2014 16:17 1.6M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-NXP-BT136-60..> 11-Mar-2014 07:52 2.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-NXP-PBSS9110..> 10-Mar-2014 17:21 1.9M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-NXP-PCA9555 ..> 11-Mar-2014 07:54 2.2M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-NXP-PMBFJ620..> 10-Mar-2014 16:16 1.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-NXP-PSMN1R7-..> 10-Mar-2014 16:17 1.6M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-NXP-PSMN7R0-..> 10-Mar-2014 17:19 2.1M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-NXP-TEA1703T..> 11-Mar-2014 08:15 2.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Nilï¬-sk-E-..> 14-Jun-2014 09:47 2.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Novembre-201..> 20-Mar-2014 17:38 3.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-OMRON-Master..> 10-Mar-2014 16:26 1.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-OSLON-SSL-Ce..> 19-Mar-2014 18:03 2.1M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-OXPCIE958-FB..> 13-Jun-2014 18:40 1.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-PADO-semi-au..> 04-Jul-2014 10:41 3.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-PBSS5160T-60..> 19-Mar-2014 18:03 2.1M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-PDTA143X-ser..> 20-Mar-2014 08:12 2.6M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-PDTB123TT-NX..> 13-Jun-2014 18:43 1.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-PESD5V0F1BL-..> 13-Jun-2014 18:43 1.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-PESD9X5.0L-P..> 13-Jun-2014 18:43 1.6M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-PIC12F609-61..> 04-Jul-2014 10:41 3.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-PIC18F2455-2..> 23-Jun-2014 10:27 3.1M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-PIC24FJ256GB..> 14-Jun-2014 09:51 2.4M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-PMBT3906-PNP..> 13-Jun-2014 18:44 1.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-PMBT4403-PNP..> 23-Jun-2014 10:27 3.1M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-PMEG4002EL-N..> 14-Jun-2014 18:18 3.4M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-PMEG4010CEH-..> 13-Jun-2014 18:43 1.6M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Panasonic-15..> 23-Jun-2014 10:29 2.1M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Panasonic-EC..> 20-Mar-2014 17:36 2.6M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Panasonic-EZ..> 20-Mar-2014 08:10 2.6M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Panasonic-Id..> 20-Mar-2014 17:35 2.6M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Panasonic-Ne..> 20-Mar-2014 17:36 2.6M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Panasonic-Ra..> 20-Mar-2014 17:37 2.6M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Panasonic-TS..> 20-Mar-2014 08:12 2.6M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Panasonic-Y3..> 20-Mar-2014 08:11 2.6M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Pico-Spox-Wi..> 10-Mar-2014 16:16 1.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Pompes-Charg..> 24-Apr-2014 20:23 3.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Ponts-RLC-po..> 14-Jun-2014 18:23 3.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Portable-Ana..> 29-Mar-2014 11:16 2.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Premier-Farn..> 21-Mar-2014 08:11 3.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Produit-3430..> 14-Jun-2014 09:48 2.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Proskit-SS-3..> 10-Mar-2014 16:26 1.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Puissance-ut..> 11-Mar-2014 07:49 2.4M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Q48-PDF.htm 23-Jun-2014 10:29 2.1M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Radial-Lead-..> 20-Mar-2014 08:12 2.6M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Realiser-un-..> 11-Mar-2014 07:51 2.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Reglement-RE..> 21-Mar-2014 08:08 3.9M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Repartiteurs..> 14-Jun-2014 18:26 2.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-S-TRI-SWT860..> 21-Mar-2014 08:11 3.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-SB175-Connec..> 11-Mar-2014 08:14 2.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-SMBJ-Transil..> 29-Mar-2014 11:12 3.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-SOT-23-Multi..> 11-Mar-2014 07:51 2.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-SPLC780A1-16..> 14-Jun-2014 18:25 2.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-SSC7102-Micr..> 23-Jun-2014 10:25 3.2M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-SVPE-series-..> 14-Jun-2014 18:15 2.0M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Sensorless-C..> 04-Jul-2014 10:42 3.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Septembre-20..> 20-Mar-2014 17:46 3.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Serie-PicoSc..> 19-Mar-2014 18:01 2.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Serie-Standa..> 14-Jun-2014 18:23 3.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Series-2600B..> 20-Mar-2014 17:30 3.0M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Series-TDS10..> 04-Jul-2014 10:39 4.0M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Signal-PCB-R..> 14-Jun-2014 18:11 2.1M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Strangkuhlko..> 21-Mar-2014 08:09 3.9M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Supercapacit..> 26-Mar-2014 17:57 2.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-TDK-Lambda-H..> 14-Jun-2014 18:21 3.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-TEKTRONIX-DP..> 10-Mar-2014 17:20 2.0M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Tektronix-AC..> 13-Jun-2014 18:44 1.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Telemetres-l..> 20-Mar-2014 17:46 3.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-Termometros-..> 14-Jun-2014 18:14 2.0M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-The-essentia..> 10-Mar-2014 16:27 1.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-U2270B-PDF.htm 14-Jun-2014 18:15 3.4M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-USB-Buccanee..> 14-Jun-2014 09:48 2.5M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-USB1T11A-PDF..> 19-Mar-2014 18:03 2.1M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-V4N-PDF.htm 14-Jun-2014 18:11 2.1M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-WetTantalum-..> 11-Mar-2014 08:14 2.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-XPS-AC-Octop..> 14-Jun-2014 18:11 2.1M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-XPS-MC16-XPS..> 11-Mar-2014 08:15 2.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-YAGEO-DATA-S..> 11-Mar-2014 08:13 2.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-ZigBee-ou-le..> 11-Mar-2014 07:50 2.4M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-celpac-SUL84..> 21-Mar-2014 08:11 3.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-china_rohs_o..> 21-Mar-2014 10:04 3.9M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-cree-Xlamp-X..> 20-Mar-2014 17:34 2.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-cree-Xlamp-X..> 20-Mar-2014 17:35 2.7M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-cree-Xlamp-X..> 20-Mar-2014 17:31 2.9M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-cree-Xlamp-m..> 20-Mar-2014 17:32 2.9M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-cree-Xlamp-m..> 20-Mar-2014 17:32 2.9M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-ir1150s_fr.p..> 29-Mar-2014 11:11 3.3M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-manual-bus-p..> 10-Mar-2014 16:29 1.9M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-propose-plus..> 11-Mar-2014 08:19 2.8M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-techfirst_se..> 21-Mar-2014 08:08 3.9M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-testo-205-20..> 20-Mar-2014 17:37 3.0M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-testo-470-Fo..> 20-Mar-2014 17:38 3.0M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Farnell-uC-OS-III-Br..> 10-Mar-2014 17:20 2.0M

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Sefram-7866HD.pdf-PD..> 29-Mar-2014 11:46 472K

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Sefram-CAT_ENREGISTR..> 29-Mar-2014 11:46 461K

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Sefram-CAT_MESUREURS..> 29-Mar-2014 11:46 435K

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Sefram-GUIDE_SIMPLIF..> 29-Mar-2014 11:46 481K

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Sefram-GUIDE_SIMPLIF..> 29-Mar-2014 11:46 442K

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Sefram-GUIDE_SIMPLIF..> 29-Mar-2014 11:46 422K

![[TXT]](http://www.audentia-gestion.fr/icons/text.gif)

Sefram-SP270.pdf-PDF..> 29-Mar-2014 11:46 464K

CHAPTER 12: The Fast Fourier Transform

There are several ways to calculate the Discrete Fourier Transform (DFT), such as solving

simultaneous linear equations or the correlation method described in Chapter 8. The Fast

Fourier Transform (FFT) is another method for calculating the DFT. While it produces the same

result as the other approaches, it is incredibly more efficient, often reducing the computation time

by a factor of hundreds. This is the same improvement as flying in a jet aircraft versus walking! If the

FFT were not available, many of the techniques described in this book would not be practical.

While the FFT only requires a few dozen lines of code, it is one of the most complicated

algorithms in DSP. But don't despair! You can easily use published FFT routines without fully

understanding the internal workings.

Real DFT Using the Complex DFT

J.W. Cooley and J.W. Tukey are given credit for bringing the FFT to the world

in their paper: "An algorithm for the machine calculation of complex Fourier

Series," Mathematics of Computation, Vol. 19, 1965, pp. 297-301. In retrospect,

others had discovered the technique many years before. For instance, the great

German mathematician Karl Friedrich Gauss (1777-1855) had used the method

more than a century earlier. This early work was largely forgotten because it

lacked the tool to make it practical: the digital computer. Cooley and Tukey

are honored because they discovered the FFT at the right time, the beginning

of the computer revolution.

The FFT is based on the complex DFT, a more sophisticated version of the real

DFT discussed in the last four chapters. These transforms are named for the

way each represents data, that is, using complex numbers or using real

numbers. The term complex does not mean that this representation is difficult

or complicated, but that a specific type of mathematics is used. Complex

mathematics often is difficult and complicated, but that isn't where the name

comes from. Chapter 29 discusses the complex DFT and provides the

background needed to understand the details of the FFT algorithm. The

topic of this chapter is simpler: how to use the FFT to calculate the real DFT,

without drowning in a mire of advanced mathematics.

226 The Scientist and Engineer's Guide to Digital Signal Processing

FIGURE 12-1

Comparing the real and complex DFTs. The real DFT takes an N point time domain signal and

creates two N/2 + 1 point frequency domain signals. The complex DFT takes two N point time

domain signals and creates two N point frequency domain signals. The crosshatched regions show

the values common to the two transforms.

[Figure panels. Real DFT: Time Domain (Time Domain Signal, samples 0 to N-1); Frequency Domain (Real Part and Imaginary Part, samples 0 to N/2). Complex DFT: Time Domain (Real Part and Imaginary Part, samples 0 to N-1); Frequency Domain (Real Part and Imaginary Part, samples 0 to N-1, with N/2 marked).]

Since the FFT is an algorithm for calculating the complex DFT, it is

important to understand how to transfer real DFT data into and out of the

complex DFT format. Figure 12-1 compares how the real DFT and the

complex DFT store data. The real DFT transforms an N point time domain

signal into two N/2 + 1 point frequency domain signals. The time domain

signal is called just that: the time domain signal. The two signals in the

frequency domain are called the real part and the imaginary part, holding

the amplitudes of the cosine waves and sine waves, respectively. This

should be very familiar from past chapters.

In comparison, the complex DFT transforms two N point time domain signals

into two N point frequency domain signals. The two time domain signals are

called the real part and the imaginary part, just as are the frequency domain

signals. In spite of their names, all of the values in these arrays are just

ordinary numbers. (If you are familiar with complex numbers: the j's are not

included in the array values; they are a part of the mathematics. Recall that the

operator, Im( ), returns a real number).

6000 'NEGATIVE FREQUENCY GENERATION

6010 'This subroutine creates the complex frequency domain from the real frequency domain.

6020 'Upon entry to this subroutine, N% contains the number of points in the signals, and

6030 'REX[ ] and IMX[ ] contain the real frequency domain in samples 0 to N%/2.

6040 'On return, REX[ ] and IMX[ ] contain the complex frequency domain in samples 0 to N%-1.

6050 '

6060 FOR K% = (N%/2+1) TO (N%-1)

6070 REX[K%] = REX[N%-K%]

6080 IMX[K%] = -IMX[N%-K%]

6090 NEXT K%

6100 '

6110 RETURN

TABLE 12-1

Suppose you have an N point signal, and need to calculate the real DFT by

means of the Complex DFT (such as by using the FFT algorithm). First, move

the N point signal into the real part of the complex DFT's time domain, and

then set all of the samples in the imaginary part to zero. Calculation of the

complex DFT results in a real and an imaginary signal in the frequency

domain, each composed of N points. Samples 0 through N/2 of these signals

correspond to the real DFT's spectrum.
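The procedure above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `complex_dft` and `real_dft_via_complex` are hypothetical names, the complex DFT is computed by slow direct summation rather than by an FFT, and no 1/N scaling is applied in the forward direction.

```python
import cmath

def complex_dft(xr, xi):
    """Complex DFT by direct summation (slow); returns (rex, imx), N points each."""
    n = len(xr)
    rex, imx = [], []
    for k in range(n):
        acc = 0j
        for i in range(n):
            # Correlate with the complex sinusoid cos(2*pi*k*i/N) - j*sin(2*pi*k*i/N)
            acc += complex(xr[i], xi[i]) * cmath.exp(-2j * cmath.pi * k * i / n)
        rex.append(acc.real)
        imx.append(acc.imag)
    return rex, imx

def real_dft_via_complex(signal):
    """Real DFT of an N point signal, obtained from the complex DFT."""
    n = len(signal)
    # Step 1: the signal goes into the real part; the imaginary part is all zeros.
    rex, imx = complex_dft(list(signal), [0.0] * n)
    # Step 2: samples 0 through N/2 are the real DFT's spectrum.
    return rex[: n // 2 + 1], imx[: n // 2 + 1]

signal = [1.0, 2.0, 0.0, -1.0, 0.5, 0.0, -2.0, 1.0]
re_part, im_part = real_dft_via_complex(signal)
```

For this 8 point signal, `re_part` and `im_part` each hold N/2 + 1 = 5 values. Replacing `complex_dft` with any FFT routine that uses the same sign convention should give the same result.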

As discussed in Chapter 10, the DFT's frequency domain is periodic when the

negative frequencies are included (see Fig. 10-9). The choice of a single

period is arbitrary; it can be chosen between -1.0 and 0, -0.5 and 0.5, 0 and

1.0, or any other one unit interval referenced to the sampling rate. The

complex DFT's frequency spectrum includes the negative frequencies in the 0

to 1.0 arrangement. In other words, one full period stretches from sample 0 to

sample N-1, corresponding with 0 to 1.0 times the sampling rate. The positive

frequencies sit between sample 0 and N/2, corresponding with 0 to 0.5. The

other samples, between N/2 + 1 and N-1, contain the negative frequency

values (which are usually ignored).
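As a small illustration of this indexing, the sketch below maps a sample number in the complex DFT's output to a frequency expressed as a fraction of the sampling rate, using the -0.5 to 0.5 arrangement. The function name `bin_frequency` is hypothetical and chosen only for this example.

```python
def bin_frequency(k, n):
    """Frequency of sample k in an N point complex DFT, as a fraction of the sampling rate.

    Samples 0 to N/2 are the positive frequencies (0 to 0.5).
    Samples N/2+1 to N-1 are the negative frequencies (just above -0.5, up to -1/N).
    """
    if k <= n // 2:
        return k / n
    return k / n - 1.0

# One full period of an 8 point spectrum, sample by sample
frequencies = [bin_frequency(k, 8) for k in range(8)]
```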

Calculating a real Inverse DFT using a complex Inverse DFT is slightly

harder. This is because you need to ensure that the negative frequencies are

loaded in the proper format. Remember, points 0 through N/2 in the

complex DFT are the same as in the real DFT, for both the real and the

imaginary parts. For the real part, point N/2 + 1 is the same as point

N/2 - 1, point N/2 + 2 is the same as point N/2 - 2, etc. This continues to

point N-1 being the same as point 1. The same basic pattern is used for

the imaginary part, except the sign is changed. That is, point N/2 + 1 is the

negative of point N/2 - 1, point N/2 + 2 is the negative of point N/2 - 2, etc.

Notice that samples 0 and N/2 do not have a matching point in this

duplication scheme. Use Fig. 10-9 as a guide to understanding this

symmetry. In practice, you load the real DFT's frequency spectrum into

samples 0 to N/2 of the complex DFT's arrays, and then use a subroutine to

generate the negative frequencies between samples N/2 + 1 and N-1. Table

12-1 shows such a program. To check that the proper symmetry is present,

after taking the inverse FFT, look at the imaginary part of the time domain.

It will contain all zeros if everything is correct (except for a few parts-per-million

of noise, using single precision calculations).
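The symmetry check can be tried with a short Python sketch. The names here are hypothetical, the inverse transform is a slow direct summation rather than an inverse FFT, and the 1/N scaling on the inverse is an assumption chosen to pair with an unscaled forward transform. The first function mirrors the loop of Table 12-1.

```python
import cmath
import math

def generate_negative_frequencies(rex_half, imx_half, n):
    """Extend samples 0..N/2 of a real DFT spectrum to the N point complex format."""
    rex = list(rex_half) + [0.0] * (n - len(rex_half))
    imx = list(imx_half) + [0.0] * (n - len(imx_half))
    for k in range(n // 2 + 1, n):
        rex[k] = rex[n - k]      # real part: same value as the mirrored point
        imx[k] = -imx[n - k]     # imaginary part: sign is changed
    return rex, imx

def inverse_complex_dft(rex, imx):
    """Inverse complex DFT by direct summation, with 1/N scaling (an assumption)."""
    n = len(rex)
    xr, xi = [], []
    for i in range(n):
        acc = 0j
        for k in range(n):
            acc += complex(rex[k], imx[k]) * cmath.exp(2j * cmath.pi * k * i / n)
        xr.append(acc.real / n)
        xi.append(acc.imag / n)
    return xr, xi

# Real DFT spectrum (samples 0 to N/2) of an 8 point signal: one cycle of
# a unit cosine plus a unit sine, under the unscaled forward convention.
n = 8
rex, imx = generate_negative_frequencies([0.0, 4.0, 0.0, 0.0, 0.0],
                                         [0.0, -4.0, 0.0, 0.0, 0.0], n)
time_real, time_imag = inverse_complex_dft(rex, imx)

# If the symmetry was loaded correctly, the imaginary part is zero (to rounding).
largest_imaginary = max(abs(v) for v in time_imag)
expected = [math.cos(2 * math.pi * i / n) + math.sin(2 * math.pi * i / n) for i in range(n)]
```

In double precision the residual in `largest_imaginary` is far smaller than the parts-per-million noise mentioned for single precision.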

FIGURE 12-2

The FFT decomposition. An N point signal is decomposed into N signals each containing a single point.

Each stage uses an interlace decomposition, separating the even and odd numbered samples.

1 signal of 16 points: 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

2 signals of 8 points: 0 2 4 6 8 10 12 14 | 1 3 5 7 9 11 13 15

4 signals of 4 points: 0 4 8 12 | 2 6 10 14 | 1 5 9 13 | 3 7 11 15

8 signals of 2 points: 0 8 | 4 12 | 2 10 | 6 14 | 1 9 | 5 13 | 3 11 | 7 15

16 signals of 1 point: 0 | 8 | 4 | 12 | 2 | 10 | 6 | 14 | 1 | 9 | 5 | 13 | 3 | 11 | 7 | 15

How the FFT works

The FFT is a complicated algorithm, and its details are usually left to those who

specialize in such things. This section describes the general operation of the

FFT, but skirts a key issue: the use of complex numbers. If you have a

background in complex mathematics, you can read between the lines to

understand the true nature of the algorithm. Don't worry if the details elude

you; few scientists and engineers who use the FFT could write the program

from scratch.

In complex notation, the time and frequency domains each contain one signal

made up of N complex points. Each of these complex points is composed of

two numbers, the real part and the imaginary part. For example, when we talk

about complex sample X[42] , it refers to the combination of ReX[42] and

ImX[42]. In other words, each complex variable holds two numbers. When

two complex variables are multiplied, the four individual components must be

combined to form the two components of the product (such as in Eq. 9-1). The

following discussion on "How the FFT works" uses this jargon of complex

notation. That is, the singular terms: signal, point, sample, and value, refer

to the combination of the real part and the imaginary part.
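To make the "four components into two" statement concrete, here is a minimal Python sketch of multiplying two complex values that are stored as separate real and imaginary numbers, as in the REX[ ] and IMX[ ] arrays. The function name `complex_multiply` is hypothetical.

```python
def complex_multiply(re_a, im_a, re_b, im_b):
    """Multiply (re_a + j*im_a) by (re_b + j*im_b) using only ordinary numbers."""
    re_product = re_a * re_b - im_a * im_b   # real part of the product
    im_product = re_a * im_b + im_a * re_b   # imaginary part of the product
    return re_product, im_product

# (1 + 2j) * (3 + 4j), checked against Python's built-in complex type
re_p, im_p = complex_multiply(1.0, 2.0, 3.0, 4.0)
builtin = complex(1.0, 2.0) * complex(3.0, 4.0)
```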

The FFT operates by decomposing an N point time domain signal into N

time domain signals each composed of a single point. The second step is to

calculate the N frequency spectra corresponding to these N time domain

signals. Lastly, the N spectra are synthesized into a single frequency

spectrum.

FIGURE 12-3

The FFT bit reversal sorting. The FFT time domain decomposition can be implemented by

sorting the samples according to bit reversed order.

| Normal order (decimal) | Normal order (binary) | After bit reversal (decimal) | After bit reversal (binary) |
|---|---|---|---|
| 0 | 0000 | 0 | 0000 |
| 1 | 0001 | 8 | 1000 |
| 2 | 0010 | 4 | 0100 |
| 3 | 0011 | 12 | 1100 |
| 4 | 0100 | 2 | 0010 |
| 5 | 0101 | 10 | 1010 |
| 6 | 0110 | 6 | 0110 |
| 7 | 0111 | 14 | 1110 |
| 8 | 1000 | 1 | 0001 |
| 9 | 1001 | 9 | 1001 |
| 10 | 1010 | 5 | 0101 |
| 11 | 1011 | 13 | 1101 |
| 12 | 1100 | 3 | 0011 |
| 13 | 1101 | 11 | 1011 |
| 14 | 1110 | 7 | 0111 |
| 15 | 1111 | 15 | 1111 |

Figure 12-2 shows an example of the time domain decomposition used in the

FFT. In this example, a 16 point signal is decomposed through four

separate stages. The first stage breaks the 16 point signal into two signals each

consisting of 8 points. The second stage decomposes the data into four signals

of 4 points. This pattern continues until there are N signals composed of a

single point. An interlaced decomposition is used each time a signal is

broken in two, that is, the signal is separated into its even and odd numbered

samples. The best way to understand this is by inspecting Fig. 12-2 until you

grasp the pattern. There are Log2 N stages required in this decomposition, i.e.,

a 16 point signal (2^4) requires 4 stages, a 512 point signal (2^9) requires 9

stages, a 4096 point signal (2^12) requires 12 stages, etc. Remember this value,

Log2 N; it will be referenced many times in this chapter.

Now that you understand the structure of the decomposition, it can be greatly

simplified. The decomposition is nothing more than a reordering of the

samples in the signal. Figure 12-3 shows the rearrangement pattern required.

On the left, the sample numbers of the original signal are listed along with

their binary equivalents. On the right, the rearranged sample numbers are

listed, also along with their binary equivalents. The important idea is that the

binary numbers are the reversals of each other. For example, sample 3 (0011)

is exchanged with sample number 12 (1100). Likewise, sample number 14

(1110) is swapped with sample number 7 (0111), and so forth. The FFT time

domain decomposition is usually carried out by a bit reversal sorting

algorithm. This involves rearranging the order of the N time domain samples

by counting in binary with the bits flipped left-for-right (such as in the far right

column in Fig. 12-3).
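The equivalence between the stage-by-stage interlaced decomposition and bit reversal can be checked with a short Python sketch. The function names are hypothetical, and the code assumes N is a power of two.

```python
def bit_reverse(index, bits):
    """Return index with its lowest `bits` binary digits flipped left-for-right."""
    result = 0
    for _ in range(bits):
        result = (result << 1) | (index & 1)
        index >>= 1
    return result

def bit_reversed_order(n):
    """Sample numbers 0..N-1 rearranged by bit reversal (N a power of two)."""
    bits = n.bit_length() - 1          # Log2 N
    return [bit_reverse(i, bits) for i in range(n)]

def interlaced_decomposition(samples):
    """Split into even and odd numbered samples, stage by stage, down to single points."""
    if len(samples) <= 1:
        return list(samples)
    return (interlaced_decomposition(samples[0::2])
            + interlaced_decomposition(samples[1::2]))

by_bit_reversal = bit_reversed_order(16)
by_decomposition = interlaced_decomposition(list(range(16)))
```

Both lists come out as the bottom row of Fig. 12-2, which is why the Log2 N decomposition stages can be replaced by a single reordering pass.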

FIGURE 12-4

The FFT synthesis. When a time domain signal is diluted with zeros, the frequency domain is

duplicated. If the time domain signal is also shifted by one sample during the dilution, the spectrum

will additionally be multiplied by a sinusoid.

| Time Domain | Frequency Domain |
|---|---|
| a b c d | A B C D |
| a 0 b 0 c 0 d 0 | A B C D A B C D |
| e f g h | E F G H |
| 0 e 0 f 0 g 0 h | E F G H E F G H, × sinusoid |

The next step in the FFT algorithm is to find the frequency spectra of the

1 point time domain signals. Nothing could be easier; the frequency

spectrum of a 1 point signal is equal to itself. This means that nothing is

required to do this step. Although there is no work involved, don't forget

that each of the 1 point signals is now a frequency spectrum, and not a time

domain signal.
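A one-line check of this claim, assuming the unscaled analysis sum with the same sign convention as the programs in this chapter (the normalization is an assumption, not something the step depends on):

```latex
X[k] = \sum_{i=0}^{N-1} x[i]\, e^{-j 2\pi k i / N}
\quad\Longrightarrow\quad
N = 1:\;\; X[0] = x[0]\, e^{-j 2\pi \cdot 0 \cdot 0 / 1} = x[0]
```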

The last step in the FFT is to combine the N frequency spectra in the exact

reverse order that the time domain decomposition took place. This is where the

algorithm gets messy. Unfortunately, the bit reversal shortcut is not

applicable, and we must go back one stage at a time. In the first stage, 16

frequency spectra (1 point each) are synthesized into 8 frequency spectra (2

points each). In the second stage, the 8 frequency spectra (2 points each) are

synthesized into 4 frequency spectra (4 points each), and so on. The last stage

results in the output of the FFT, a 16 point frequency spectrum.

Figure 12-4 shows how two frequency spectra, each composed of 4 points,

are combined into a single frequency spectrum of 8 points. This synthesis

must undo the interlaced decomposition done in the time domain. In other

words, the frequency domain operation must correspond to the time domain

procedure of combining two 4 point signals by interlacing. Consider two

time domain signals, abcd and efgh. An 8 point time domain signal can be

formed by two steps: dilute each 4 point signal with zeros to make it an

8 point signal, and then add the signals together. That is, abcd becomes

a0b0c0d0, and efgh becomes 0e0f0g0h. Adding these two 8 point signals

produces aebfcgdh. As shown in Fig. 12-4, diluting the time domain with zeros

corresponds to a duplication of the frequency spectrum. Therefore, the

frequency spectra are combined in the FFT by duplicating them, and then

adding the duplicated spectra together.

FIGURE 12-5

FFT synthesis flow diagram. This shows the method of combining two 4 point frequency spectra into a single 8 point frequency spectrum. The ×S operation means that the signal is multiplied by a sinusoid with an appropriately selected frequency.

[Diagram labels: Odd Four Point Frequency Spectrum; Even Four Point Frequency Spectrum; ×S (four times); + (eight times); Eight Point Frequency Spectrum.]

FIGURE 12-6

The FFT butterfly. This is the basic calculation element in the FFT, taking two complex points and converting them into two other complex points.

[Diagram labels: 2 point input; ×S; 2 point output.]

In order to match up when added, the two time domain signals are diluted with

zeros in a slightly different way. In one signal, the odd points are zero, while

in the other signal, the even points are zero. In other words, one of the time

domain signals (0e0f0g0h in Fig. 12-4) is shifted to the right by one sample.

This time domain shift corresponds to multiplying the spectrum by a sinusoid.

To see this, recall that a shift in the time domain is equivalent to convolving

the signal with a shifted delta function. This multiplies the signal's spectrum

with the spectrum of the shifted delta function. The spectrum of a shifted delta

function is a sinusoid (see Fig 11-2).
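The dilution and shift properties can be verified numerically. The sketch below is an illustration only: `dft` is a slow direct-summation transform written for this example, the sample values standing in for abcd and efgh are arbitrary, and the "sinusoid" is taken to be the complex sinusoid exp(-j2πk/8) that corresponds to a one sample shift in an 8 point signal.

```python
import cmath

def dft(x):
    """Complex DFT by direct summation (slow, for checking only)."""
    n = len(x)
    return [sum(x[i] * cmath.exp(-2j * cmath.pi * k * i / n) for i in range(n))
            for k in range(n)]

abcd = [1.0, 2.0, 3.0, 4.0]      # arbitrary example values
efgh = [5.0, -1.0, 0.5, 2.0]

# Dilute with zeros: a0b0c0d0 and 0e0f0g0h
even_diluted = [v for s in abcd for v in (s, 0.0)]
odd_diluted = [v for s in efgh for v in (0.0, s)]

# Frequency domain view: duplicate each 4 point spectrum; the shifted
# signal's spectrum is additionally multiplied by a sinusoid.
duplicated_even = dft(abcd) * 2
spectrum_efgh = dft(efgh)
duplicated_odd = [spectrum_efgh[k % 4] * cmath.exp(-2j * cmath.pi * k / 8)
                  for k in range(8)]
combined = [e + o for e, o in zip(duplicated_even, duplicated_odd)]

# Direct 8 point DFT of the interlaced signal aebfcgdh, for comparison
interlaced = [v for pair in zip(abcd, efgh) for v in pair]
direct = dft(interlaced)
max_error = max(abs(c - d) for c, d in zip(combined, direct))
```

`max_error` is at the level of floating point rounding, which is the sense in which adding the duplicated (and, for one of them, sinusoid-multiplied) spectra undoes the interlaced decomposition.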

Figure 12-5 shows a flow diagram for combining two 4 point spectra into a

single 8 point spectrum. To reduce the situation even more, notice that Fig. 12-

5 is formed from the basic pattern in Fig 12-6 repeated over and over.

232 The Scientist and Engineer's Guide to Digital Signal Processing

Time Domain Data

Frequency Domain Data

Bit Reversal

Data Sorting

Overhead

Overhead

Calculation

Decomposition

Synthesis

Time

Domain

Frequency

Domain

Butterfly

FIGURE 12-7

Flow diagram of the FFT. This is based

on three steps: (1) decompose an N point

time domain signal into N signals each

containing a single point, (2) find the

spectrum of each of the N point signals

(nothing required), and (3) synthesize the

N frequency spectra into a single

frequency spectrum.

Loop for each Butterfly

Loop for Leach sub-DFT

Loop for Log2N stages

This simple flow diagram is called a butterfly due to its winged appearance.

The butterfly is the basic computational element of the FFT, transforming two

complex points into two other complex points.
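As a rough illustration of how butterflies combine two 4 point spectra into one 8 point spectrum, consider the Python sketch below. The function names `butterfly` and `combine` are hypothetical, and the arithmetic follows the butterfly used in Table 12-4 (multiply the lower input by a sinusoid, then form a sum and a difference).

```python
import cmath

def dft(x):
    """Naive complex DFT by correlation, used here only as a reference."""
    n = len(x)
    return [sum(x[i] * cmath.exp(-2j * cmath.pi * k * i / n) for i in range(n))
            for k in range(n)]

def butterfly(a, b, w):
    """Turn two complex points into two other complex points."""
    t = b * w                # lower input multiplied by the sinusoid
    return a + t, a - t

def combine(even_spec, odd_spec):
    """Synthesize two N/2 point spectra into one N point spectrum."""
    half = len(even_spec)
    n = 2 * half
    out = [0j] * n
    for k in range(half):
        w = cmath.exp(-2j * cmath.pi * k / n)
        out[k], out[k + half] = butterfly(even_spec[k], odd_spec[k], w)
    return out

abcd = [1.0, 2.0, -1.0, 0.5]
efgh = [0.5, -2.0, 3.0, 1.0]
interlaced = [v for pair in zip(abcd, efgh) for v in pair]   # aebfcgdh

combined = combine(dft(abcd), dft(efgh))
max_error = max(abs(a - b) for a, b in zip(combined, dft(interlaced)))
print(max_error)   # expected to be at round-off level
```

Each call to `butterfly` produces one point in the lower half of the output spectrum and one in the upper half, which is why only N/2 butterflies are needed per stage.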

Figure 12-7 shows the structure of the entire FFT. The time domain

decomposition is accomplished with a bit reversal sorting algorithm.

Transforming the decomposed data into the frequency domain involves nothing

and therefore does not appear in the figure.
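The bit reversal ordering can be sketched in a few lines of Python. This is an illustrative version that reverses the bits of each index directly; it is not the counting method used in the book's listings, and the names `reverse_bits` and `bit_reversal_sort` are assumptions.

```python
def reverse_bits(value, bits):
    """Reverse the lowest `bits` bits of `value` (e.g. 001 -> 100)."""
    result = 0
    for _ in range(bits):
        result = (result << 1) | (value & 1)
        value >>= 1
    return result

def bit_reversal_sort(signal):
    """Return the samples reordered by bit-reversed index."""
    n = len(signal)
    bits = n.bit_length() - 1
    if n == 0 or n != 1 << bits:
        raise ValueError("length must be a power of two")
    return [signal[reverse_bits(i, bits)] for i in range(n)]

order = bit_reversal_sort(list(range(8)))
print(order)   # [0, 4, 2, 6, 1, 5, 3, 7]
```

Applying the sort twice returns the original order, since reversing the bits of an index twice gives back the same index.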

The frequency domain synthesis requires three loops. The outer loop runs

through the $\log_2 N$ stages (i.e., each level in Fig. 12-2, starting from the bottom

and moving to the top). The middle loop moves through each of the individual

frequency spectra in the stage being worked on (i.e., each of the boxes on any

one level in Fig. 12-2). The innermost loop uses the butterfly to calculate the

points in each frequency spectra (i.e., looping through the samples inside any

one box in Fig. 12-2). The overhead boxes in Fig. 12-7 determine the

beginning and ending indexes for the loops, as well as calculating the sinusoids

needed in the butterflies. Now we come to the heart of this chapter, the actual

FFT programs.
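Before looking at the programs, it may help to see the three loops with the arithmetic stripped out. The Python sketch below only lists which pairs of samples each butterfly touches; the loop bounds follow the BASIC routine in Table 12-4 translated to zero-based indexing, and the name `butterfly_schedule` is hypothetical.

```python
def butterfly_schedule(n):
    """List (stage, upper index, lower index) for every butterfly."""
    m = n.bit_length() - 1            # number of stages, log2(n)
    schedule = []
    for stage in range(1, m + 1):     # outer loop: one pass per stage
        le = 1 << stage               # length of each spectrum in this stage
        le2 = le // 2
        for j in range(le2):          # middle loop, as ordered in Table 12-4
            for i in range(j, n, le): # inner loop: one butterfly per step
                schedule.append((stage, i, i + le2))
    return schedule

schedule = butterfly_schedule(8)
for entry in schedule:
    print(entry)
print(len(schedule))   # 12, i.e. (N/2) * log2(N) butterflies for N = 8
```

In the first stage the butterflies pair adjacent samples; in each later stage the distance between the paired samples doubles.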


5000 'COMPLEX DFT BY CORRELATION
5010 'Upon entry, N% contains the number of points in the DFT, and
5020 'XR[ ] and XI[ ] contain the real and imaginary parts of the time domain.
5030 'Upon return, REX[ ] and IMX[ ] contain the frequency domain data.
5040 'All signals run from 0 to N%-1.
5050 '
5060 PI = 3.14159265                  'Set constants
5070 '
5080 FOR K% = 0 TO N%-1               'Zero REX[ ] and IMX[ ], so they can be used
5090   REX[K%] = 0                    'as accumulators during the correlation
5100   IMX[K%] = 0
5110 NEXT K%
5120 '
5130 FOR K% = 0 TO N%-1               'Loop for each value in frequency domain
5140   FOR I% = 0 TO N%-1             'Correlate with the complex sinusoid, SR & SI
5150     '
5160     SR = COS(2*PI*K%*I%/N%)      'Calculate complex sinusoid
5170     SI = -SIN(2*PI*K%*I%/N%)
5180     REX[K%] = REX[K%] + XR[I%]*SR - XI[I%]*SI
5190     IMX[K%] = IMX[K%] + XR[I%]*SI + XI[I%]*SR
5200     '
5210   NEXT I%
5220 NEXT K%
5230 '
5240 RETURN

TABLE 12-2

FFT Programs

As discussed in Chapter 8, the real DFT can be calculated by correlating

the time domain signal with sine and cosine waves (see Table 8-2). Table

12-2 shows a program to calculate the complex DFT by the same method.

In an apples-to-apples comparison, this is the program that the FFT

improves upon.
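For readers who prefer a modern language, the correlation method might be written in Python as follows. This is a sketch that mirrors the arithmetic of Table 12-2; returning new lists instead of filling global arrays, and the function name `dft_by_correlation`, are assumptions made for this example.

```python
import math

def dft_by_correlation(xr, xi):
    """Complex DFT by correlation, mirroring Table 12-2."""
    n = len(xr)
    rex = [0.0] * n       # accumulators for the real part
    imx = [0.0] * n       # accumulators for the imaginary part
    for k in range(n):                # each value in the frequency domain
        for i in range(n):            # correlate with the complex sinusoid
            sr = math.cos(2 * math.pi * k * i / n)
            si = -math.sin(2 * math.pi * k * i / n)
            rex[k] += xr[i] * sr - xi[i] * si
            imx[k] += xr[i] * si + xi[i] * sr
    return rex, imx

# One full cycle of a cosine wave in 8 samples.
cosine = [math.cos(2 * math.pi * i / 8) for i in range(8)]
rex, imx = dft_by_correlation(cosine, [0.0] * 8)
print([round(v, 6) for v in rex])
```

The two nested loops each run N times, so the work grows as N squared; this is the cost the FFT reduces.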

Tables 12-3 and 12-4 show two different FFT programs, one in FORTRAN and

one in BASIC. First we will look at the BASIC routine in Table 12-4. This

subroutine produces exactly the same output as the correlation technique in

Table 12-2, except it does it much faster. The block diagram in Fig. 12-7 can

be used to identify the different sections of this program. Data are passed to

this FFT subroutine in the arrays: REX[ ] and IMX[ ], each running from

sample 0 to N-1. Upon return from the subroutine, REX[ ] and IMX[ ] are

overwritten with the frequency domain data. This is another way that the FFT

is highly optimized; the same arrays are used for the input, intermediate

storage, and output. This efficient use of memory is important for designing

fast hardware to calculate the FFT. The term in-place computation is used

to describe this memory usage.
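To make the in-place idea concrete, here is a Python sketch of the same decimation in time procedure. It is a translation of the BASIC routine in Table 12-4 under a few assumptions: Python lists stand in for the REX[ ] and IMX[ ] arrays, the function name `fft_in_place` is hypothetical, and a length check has been added that the BASIC listing does not have.

```python
import math

def fft_in_place(rex, imx):
    """Overwrite rex and imx with their DFT (length must be a power of two)."""
    n = len(rex)
    if n == 0 or n & (n - 1) or len(imx) != n:
        raise ValueError("both lists need the same power-of-two length")
    m = n.bit_length() - 1
    nd2 = n // 2

    j = nd2                                   # bit reversal sorting
    for i in range(1, n - 1):
        if i < j:
            rex[i], rex[j] = rex[j], rex[i]
            imx[i], imx[j] = imx[j], imx[i]
        k = nd2
        while k <= j:
            j -= k
            k //= 2
        j += k

    for stage in range(1, m + 1):             # loop for each stage
        le = 1 << stage
        le2 = le // 2
        ur, ui = 1.0, 0.0
        sr = math.cos(math.pi / le2)          # sinusoid step for this stage
        si = -math.sin(math.pi / le2)
        for j in range(le2):                  # loop for each sub-DFT
            for i in range(j, n, le):         # loop for each butterfly
                ip = i + le2
                tr = rex[ip] * ur - imx[ip] * ui
                ti = rex[ip] * ui + imx[ip] * ur
                rex[ip] = rex[i] - tr
                imx[ip] = imx[i] - ti
                rex[i] += tr
                imx[i] += ti
            ur, ui = ur * sr - ui * si, ur * si + ui * sr

rex = [1.0, 2.0, 0.0, -1.0, 0.5, 0.0, 3.0, -2.0]
imx = [0.0] * 8
fft_in_place(rex, imx)      # rex and imx now hold the frequency domain
print(rex[0])               # 3.5, the sum of the input samples
```

No list other than the two inputs is allocated, which is the property the text calls in-place computation.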

While all FFT programs produce the same numerical result, there are subtle

variations in programming that you need to look out for. Several of these


TABLE 12-3

The Fast Fourier Transform in FORTRAN. Data are passed to this subroutine in the variables X( ) and M. The integer, M, is the base two logarithm of the length of the DFT, i.e., M = 8 for a 256 point DFT, M = 12 for a 4096 point DFT, etc. The complex array, X( ), holds the time domain data upon entering the DFT. Upon return from this subroutine, X( ) is overwritten with the frequency domain data. Take note: this subroutine requires that the input and output signals run from X(1) through X(N), rather than the customary X(0) through X(N-1).

      SUBROUTINE FFT(X,M)
      COMPLEX X(4096),U,S,T
      PI=3.14159265
C     N IS THE NUMBER OF POINTS IN THE DFT
      N=2**M
C     LOOP FOR EACH OF THE M STAGES
      DO 20 L=1,M
        LE=2**(M+1-L)
        LE2=LE/2
        U=(1.0,0.0)
        S=CMPLX(COS(PI/FLOAT(LE2)),-SIN(PI/FLOAT(LE2)))
        DO 20 J=1,LE2
          DO 10 I=J,N,LE
            IP=I+LE2
            T=X(I)+X(IP)
            X(IP)=(X(I)-X(IP))*U
   10     X(I)=T
   20 U=U*S
C     BIT REVERSAL SORTING, DONE AFTER THE NESTED LOOPS
      ND2=N/2
      NM1=N-1
      J=1
      DO 50 I=1,NM1
        IF(I.GE.J) GO TO 30
        T=X(J)
        X(J)=X(I)
        X(I)=T
   30   K=ND2
   40   IF(K.GE.J) GO TO 50
        J=J-K
        K=K/2
        GO TO 40
   50 J=J+K
      RETURN
      END

differences are illustrated by the FORTRAN program listed in Table

12-3. This program uses an algorithm called decimation in frequency, while

the previously described algorithm is called decimation in time. In a

decimation in frequency algorithm, the bit reversal sorting is done after the

three nested loops. There are also FFT routines that completely eliminate the

bit reversal sorting. None of these variations significantly improve the

performance of the FFT, and you shouldn't worry about which one you are

using.
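A decimation in frequency routine can be sketched in Python as well. The version below follows the structure of the FORTRAN program in Table 12-3 (butterflies first, bit reversal sorting last), but it is an illustrative translation: the indexing has been shifted to run from 0 to N-1, a length check has been added, and the name `fft_dif` is hypothetical.

```python
import cmath

def fft_dif(x):
    """In-place decimation in frequency FFT on a list of complex numbers."""
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    m = n.bit_length() - 1

    for stage in range(1, m + 1):             # butterflies come first
        le = 1 << (m + 1 - stage)             # spectra get shorter each stage
        le2 = le // 2
        u = 1 + 0j
        s = cmath.exp(-1j * cmath.pi / le2)
        for j in range(le2):
            for i in range(j, n, le):
                ip = i + le2
                t = x[i] + x[ip]
                x[ip] = (x[i] - x[ip]) * u    # sinusoid applied after the difference
                x[i] = t
            u *= s

    j = 0                                     # bit reversal sorting comes last
    for i in range(n - 1):
        if i < j:
            x[i], x[j] = x[j], x[i]
        k = n // 2
        while k <= j:
            j -= k
            k //= 2
        j += k
    return x

signal = [1.0, 2.0, 0.0, -1.0, 0.5, 0.0, 3.0, -2.0]
spectrum = fft_dif([complex(v) for v in signal])
print(spectrum[0])   # (3.5+0j), the sum of the input samples
```

Compared with the decimation in time butterfly, the sinusoid is applied after the subtraction rather than before the addition, and the stages run from the longest spectra to the shortest.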

The important differences between FFT algorithms concern how data are

passed to and from the subroutines. In the BASIC program, data enter and

leave the subroutine in the arrays REX[ ] and IMX[ ], with the samples

running from index 0 to N-1. In the FORTRAN program, data are passed

in the complex array X( ), with the samples running from 1 to N. Since this

is an array of complex variables, each sample in X( ) consists of two

numbers, a real part and an imaginary part. The length of the DFT must

also be passed to these subroutines. In the BASIC program, the variable

N% is used for this purpose. In comparison, the FORTRAN program uses

the variable M, which is defined to equal $\log_2 N$. For instance, M will be


TABLE 12-4

The Fast Fourier Transform in BASIC.

1000 'THE FAST FOURIER TRANSFORM
1010 'Upon entry, N% contains the number of points in the DFT, REX[ ] and
1020 'IMX[ ] contain the real and imaginary parts of the input. Upon return,
1030 'REX[ ] and IMX[ ] contain the DFT output. All signals run from 0 to N%-1.
1040 '
1050 PI = 3.14159265                  'Set constants
1060 NM1% = N%-1
1070 ND2% = N%/2
1080 M% = CINT(LOG(N%)/LOG(2))
1090 J% = ND2%
1100 '
1110 FOR I% = 1 TO N%-2               'Bit reversal sorting
1120   IF I% >= J% THEN GOTO 1190
1130   TR = REX[J%]
1140   TI = IMX[J%]
1150   REX[J%] = REX[I%]
1160   IMX[J%] = IMX[I%]
1170   REX[I%] = TR
1180   IMX[I%] = TI
1190   K% = ND2%
1200   IF K% > J% THEN GOTO 1240
1210   J% = J%-K%
1220   K% = K%/2
1230   GOTO 1200
1240   J% = J%+K%
1250 NEXT I%
1260 '
1270 FOR L% = 1 TO M%                 'Loop for each stage
1280   LE% = CINT(2^L%)
1290   LE2% = LE%/2
1300   UR = 1
1310   UI = 0
1320   SR = COS(PI/LE2%)              'Calculate sine & cosine values
1330   SI = -SIN(PI/LE2%)
1340   FOR J% = 1 TO LE2%             'Loop for each sub DFT
1350     JM1% = J%-1
1360     FOR I% = JM1% TO NM1% STEP LE%       'Loop for each butterfly
1370       IP% = I%+LE2%
1380       TR = REX[IP%]*UR - IMX[IP%]*UI     'Butterfly calculation
1390       TI = REX[IP%]*UI + IMX[IP%]*UR
1400       REX[IP%] = REX[I%]-TR
1410       IMX[IP%] = IMX[I%]-TI
1420       REX[I%] = REX[I%]+TR
1430       IMX[I%] = IMX[I%]+TI
1440     NEXT I%
1450     TR = UR
1460     UR = TR*SR - UI*SI
1470     UI = TR*SI + UI*SR
1480   NEXT J%
1490 NEXT L%
1500 '
1510 RETURN


2000 'INVERSE FAST FOURIER TRANSFORM SUBROUTINE
2010 'Upon entry, N% contains the number of points in the IDFT, REX[ ] and
2020 'IMX[ ] contain the real and imaginary parts of the complex frequency domain.
2030 'Upon return, REX[ ] and IMX[ ] contain the complex time domain.
2040 'All signals run from 0 to N%-1.
2050 '
2060 FOR K% = 0 TO N%-1               'Change the sign of IMX[ ]
2070   IMX[K%] = -IMX[K%]
2080 NEXT K%
2090 '
2100 GOSUB 1000                       'Calculate forward FFT (Table 12-4)
2110 '
2120 FOR I% = 0 TO N%-1               'Divide the time domain by N% and
2130   REX[I%] = REX[I%]/N%           'change the sign of IMX[ ]
2140   IMX[I%] = -IMX[I%]/N%
2150 NEXT I%
2160 '
2170 RETURN

TABLE 12-5

8 for a 256 point DFT, 12 for a 4096 point DFT, etc. The point is, the

programmer who writes an FFT subroutine has many options for interfacing

with the host program. Arrays that run from 1 to N, such as in the

FORTRAN program, are especially aggravating. Most of the DSP literature

(including this book) explains algorithms assuming the arrays run from